Introduction to Agents using Azure AI Foundry

A code-first app using the Azure AI Foundry Agent Service

Before learning and implementing agents in the .NET ecosystem, let’s cover some basics. I suggest reading this for background on ML.NET, which also gives a feel for some of the basics of LLMs.

The Foundation: What is a Large Language Model (LLM)?

Before we get to the future, let’s ground ourselves in the present. At its core, an LLM is a sophisticated text-prediction engine.

Think of it as an incredibly well-read consultant. It has consumed a vast portion of the internet, books, and articles. You can ask it to:

Generate content (write a blog post, a marketing email, or a script in a programming language).

Summarize a long document.

Translate between languages.

Answer a complex question.

You give it a prompt (input), and it gives you a response (output).

But here’s the key limitation: An LLM is passive. It can’t do anything outside of its text box. It can tell you how to book a flight, but it can’t open a browser, go to the airline’s website, and actually book it for you. It’s all talk, no action.

The Evolution: Meet the AI Agent

An AI Agent is what you get when you take that brilliant LLM “brain” and build an autonomous system around it. An agent isn’t just a responder; it’s a doer.

If an LLM is a consultant who gives you a plan, an AI Agent is the project manager who takes your goal and executes the entire plan, end-to-end.

An agent is typically composed of four key parts:

The LLM (The “Brain”): The agent uses the LLM for its core reasoning, planning, and decision-making. “The user wants to book a flight. What’s the first step?”

Tools (The “Hands”): This is the game-changer. An agent has access to a set of tools (like APIs) that allow it to interact with the real world. These tools can include:

Web or file search.

Code execution (to run a script).

Database access (to check your stored preferences).

APIs (to connect to your calendar, your email, or the airline’s booking system).

Planning & Task Decomposition: When you give an agent a complex goal (e.g., “Plan my weekend trip to Cape Town”), it uses its LLM brain to break that goal down into a series of steps:

Step 1: Search for flights to Cape Town for this Friday.

Step 2: Check my calendar for conflicts.

Step 3: Find top-rated hotels near the waterfront.

Step 4: Present the top 3 options to the user.

Step 5: Await approval and then book the chosen option.

Memory (The “Scratchpad”): An agent can remember the results of its previous actions. It can learn from its mistakes and self-correct. If a search fails, it can try a different query. This “inner monologue” or “chain of thought” allows it to handle multi-step tasks without human intervention.
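The four parts above can be sketched as a small loop. This is a toy illustration in Python, not the Semantic Kernel or Foundry Agent Service API: `fake_llm_plan`, the two tools, and their canned return values are hypothetical stand-ins chosen only to show how the brain, hands, plan, and scratchpad fit together.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tools (the "hands"): plain functions the agent may call.
def search_flights(destination: str) -> str:
    return f"3 flights found to {destination}"

def check_calendar(day: str) -> str:
    return f"no conflicts on {day}"

TOOLS: Dict[str, Callable[[str], str]] = {
    "search_flights": search_flights,
    "check_calendar": check_calendar,
}

def fake_llm_plan(goal: str) -> List[Tuple[str, str]]:
    """Stand-in for the LLM "brain": returns (tool_name, argument) steps.

    A real agent would ask a model to decompose the goal; here the plan
    is hard-coded so the sketch runs without any model or network call.
    """
    return [("search_flights", "Cape Town"), ("check_calendar", "Friday")]

def run_agent(goal: str) -> List[str]:
    memory: List[str] = []  # the "scratchpad" holding results of past steps
    for tool_name, argument in fake_llm_plan(goal):
        tool = TOOLS.get(tool_name)
        if tool is None:
            # The plan asked for a tool we do not have: record it and move on.
            memory.append(f"{tool_name}: not available")
            continue
        memory.append(f"{tool_name}: {tool(argument)}")
    return memory

result = run_agent("Plan my weekend trip to Cape Town")
print(result)
```

In a real agent the plan is not fixed up front: the model sees the scratchpad after each step and decides what to do next, which is what lets it retry or change course.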

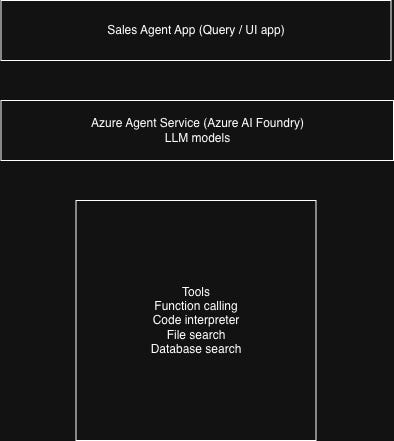

In this post, we will explore and implement a code-first ‘Sales Agent’ designed to analyze sales data. It is an LLM-powered agent, meaning it uses an LLM to process requests, and it is built with the Semantic Kernel framework. It connects to external tools like databases, hosted files, and a code interpreter to generate charts.

Here is the high-level workflow for the LLM-powered ‘Sales Agent’.

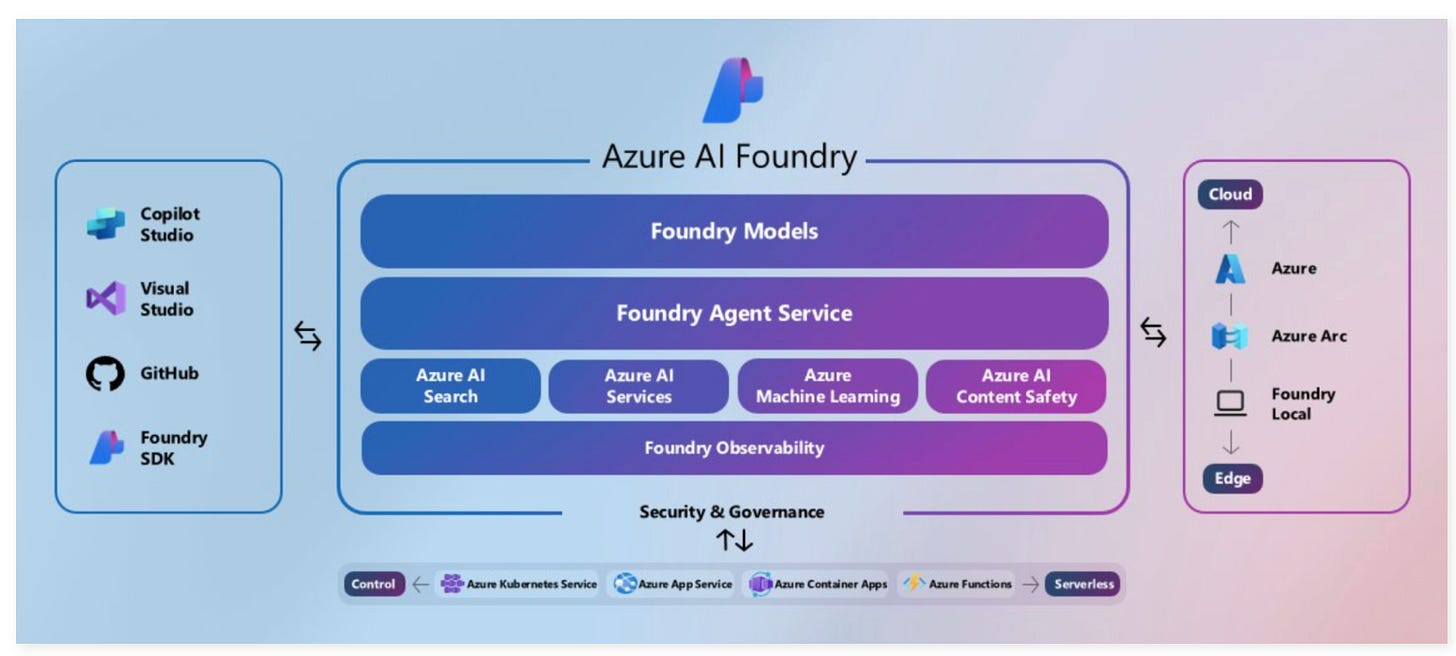

Now that we have a foundational understanding of what LLMs and agents are, the next logical step is orchestration. To effectively orchestrate and maintain these agents, a dedicated platform is required. This is precisely where Azure AI Foundry comes into the picture.

Azure AI Foundry is a managed service from Microsoft on the Azure cloud designed to handle the entire agent lifecycle. It provides essential capabilities, such as:

Rapid deployments and automation

Secure data connections

Flexible model selection

Enterprise-grade security

What is Semantic Kernel? Your AI Orchestra Conductor

If you’re a developer looking to build sophisticated AI applications, you’ve probably hit a wall. Large Language Models (LLMs) like those from Azure OpenAI are incredibly powerful at understanding and generating language, but they live in their own world. They can’t browse your company’s database, send an email, or call your existing C# functions.

This is where the Semantic Kernel (SK) framework comes in.

Think of Semantic Kernel as an AI orchestra conductor. Your LLM (like GPT-4) is the star musician: brilliant, but it only knows how to play its instrument (language). Your traditional code (like your .NET business logic, APIs, and databases) makes up the other musicians in the orchestra, each with a specific skill.

Semantic Kernel is the conductor that hands the right sheet music (the prompt) to the LLM and coordinates it with the other musicians (your code) to create a complex, harmonious piece of music (a useful application).

It is an open-source SDK from Microsoft that acts as a lightweight orchestration layer, allowing you to seamlessly blend AI with conventional programming languages like C#, Python, and Java.

In short:

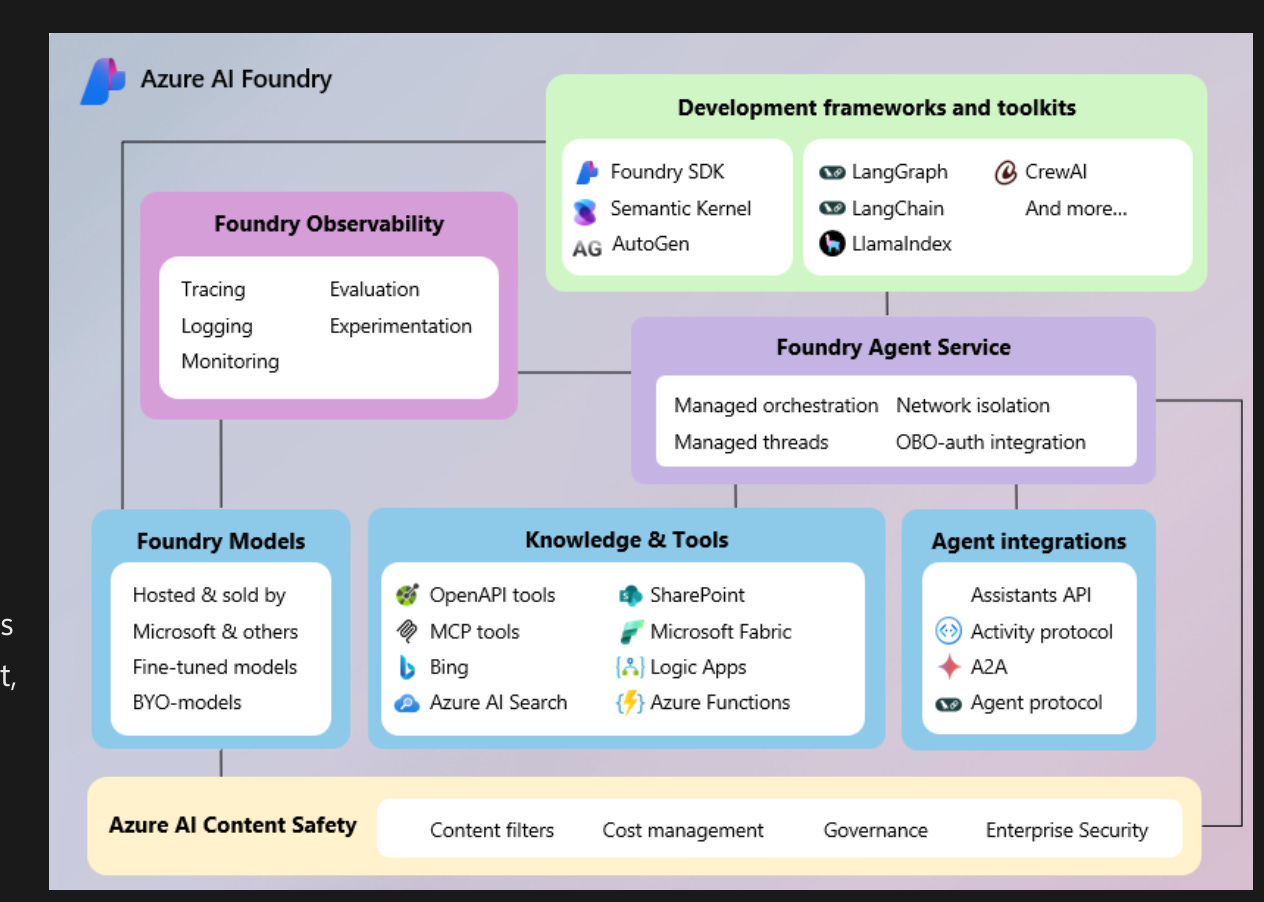

Semantic Kernel is the open-source SDK (the “how-to”) for building AI agents.

Azure AI Foundry (formerly Azure AI Studio) is the managed platform (the “where-to”) to deploy, run, and scale those agents in the cloud.

For example, the framework provides a specific AzureAIAgent class. This agent is designed to run within the Azure AI service, seamlessly connecting to your Azure AI Search for data, using your Azure OpenAI models, and automatically handling things like conversation history and tool (plugin) execution.

Traditional applications operate on predefined logic. When you pass instructions, the application follows a fixed path to produce a result that is already planned and structured. The output is predictable because the entire process is hard-coded.

In contrast, LLM-powered agents operate dynamically. When you provide instructions and context, the agent itself determines how to handle the task rather than following a rigid script. Because it adapts to each request instead of needing a new code path for every case, it can significantly reduce manual effort. It’s a much more flexible approach.

Azure AI Foundry is designed to support this approach. It’s a platform that combines models, tools, frameworks, and governance into a unified system for building intelligent agents. At the center of this system is Azure AI Foundry Agent Service, which runs agents across development, deployment, and production.

Let’s jump to the coding part:

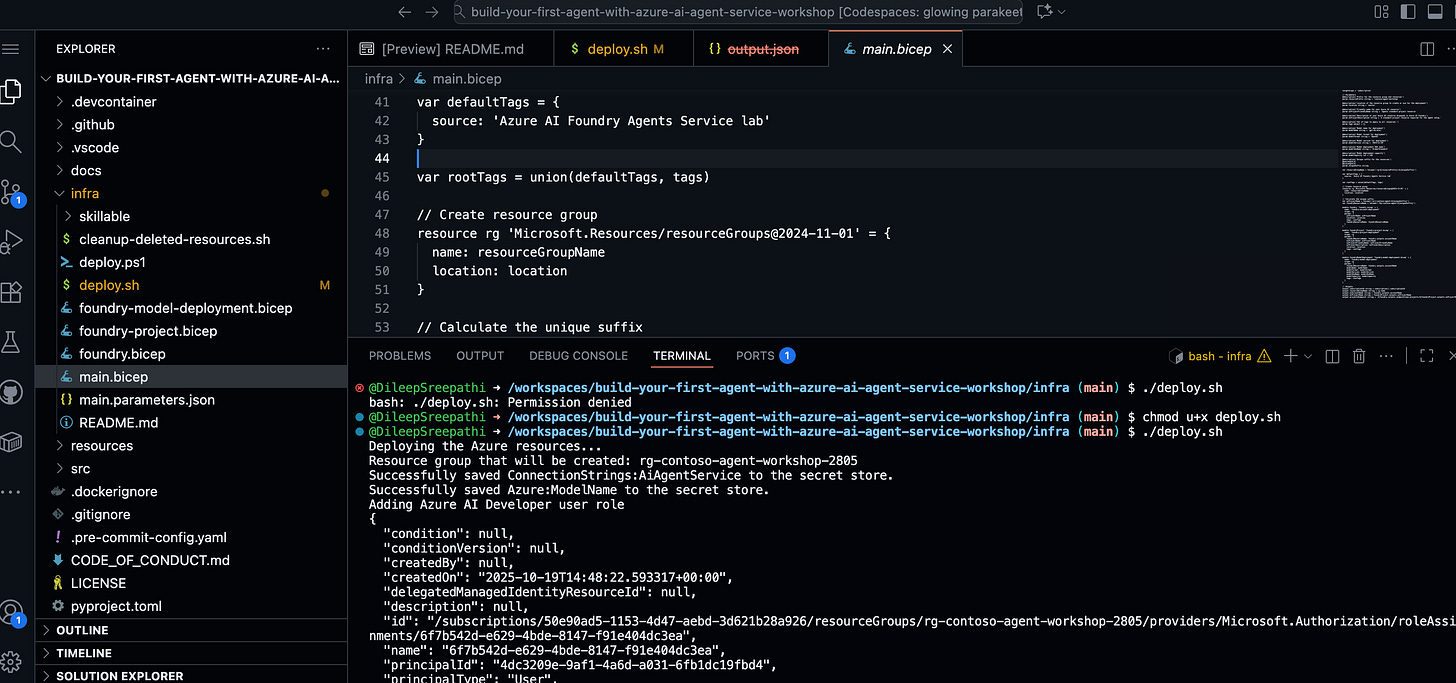

GitHub link: https://github.com/DileepSreepathi/sales-agent-workshop

Resource setup: once the project is cloned, set up the resources the project requires. They are created in the Azure cloud by running the command below.

./deploy.sh

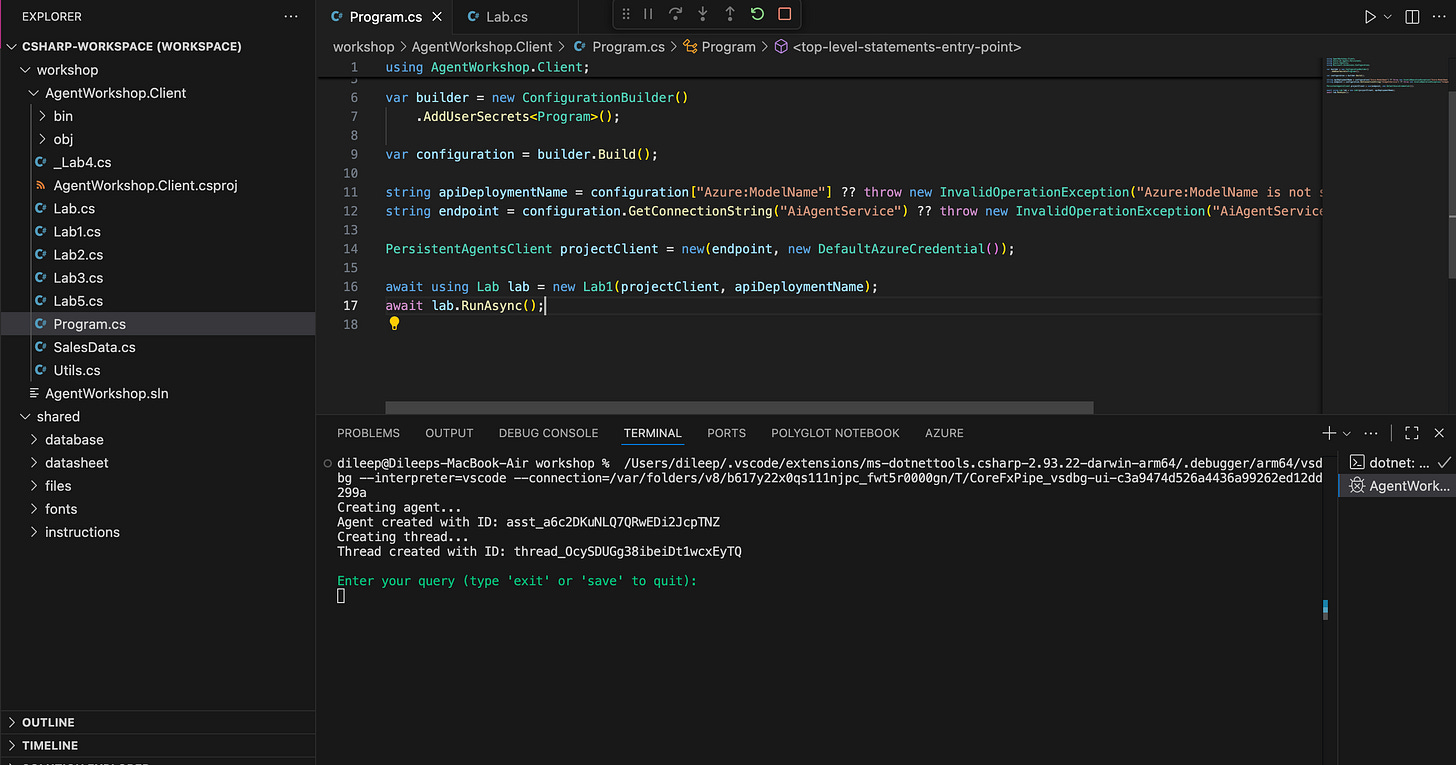

After the Azure resources are deployed, open the project workspace in VS Code and run the application (e.g., by typing dotnet run in the terminal). This executes the Program.cs entry point, which runs the Lab1.cs code to create the agent. The agent then uses an LLM to process the input prompt, generate a query, and connect to the database to fetch the results. Each agent is created under its own separate thread.

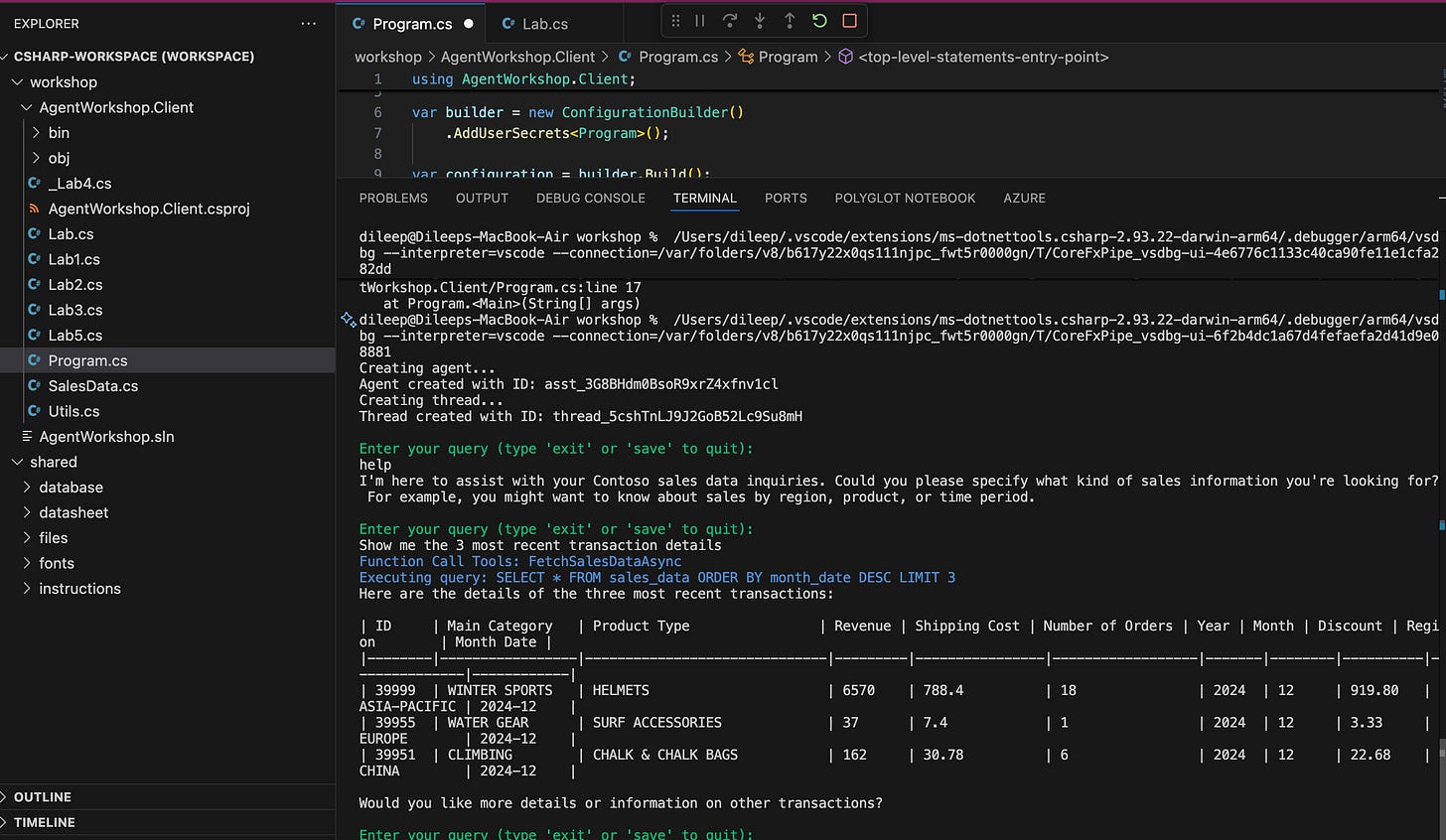

Function calling enables Large Language Models to interact with external systems. The LLM determines when to invoke a function based on instructions, function definitions, and user prompts. The LLM then returns structured data that can be used by the agent app to invoke a function.
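The hand-off described above can be sketched as follows. This is a minimal Python illustration, not the workshop’s C# code or the Foundry Agent Service wire format: the JSON shape of `model_reply`, the function `get_sales_by_region`, and its canned figures are all assumptions made for the example.

```python
import json

def get_sales_by_region(region: str) -> dict:
    # Hypothetical business function; the figures are made-up sample data.
    totals = {"EMEA": 1200, "APAC": 950}
    return {"region": region, "total": totals.get(region, 0)}

# Functions the agent app is willing to run on the model's behalf.
REGISTRY = {"get_sales_by_region": get_sales_by_region}

def dispatch(llm_output: str) -> str:
    """Parse the model's structured reply and invoke the named function."""
    call = json.loads(llm_output)
    name = call.get("name")
    func = REGISTRY.get(name)
    if func is None:
        # Never run anything the app did not explicitly register.
        return json.dumps({"error": f"unknown function: {name}"})
    result = func(**call.get("arguments", {}))
    # The result goes back to the model as text so it can compose an answer.
    return json.dumps(result)

# Assumed shape of a structured reply: a function name plus its arguments.
model_reply = '{"name": "get_sales_by_region", "arguments": {"region": "EMEA"}}'
print(dispatch(model_reply))
```

The point of the registry is that the model only chooses among functions; the app stays in control of what actually executes.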

Dynamic SQL Generation

When the app starts, it incorporates the database schema and key data into the instructions for the Foundry Agent Service. Using this input, the LLM generates SQLite-compatible SQL queries to respond to user requests expressed in natural language.
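One way to picture this step is a sketch that reads the schema from SQLite and appends it to the agent instructions. It is written in Python with the standard `sqlite3` module rather than the workshop’s C#; the `sales` table, its columns, and the wording of the instructions are hypothetical.

```python
import sqlite3

def build_instructions(conn: sqlite3.Connection) -> str:
    """Return agent instructions with the schema and key values appended."""
    # sqlite_master stores the CREATE statement for every table.
    rows = conn.execute(
        "SELECT sql FROM sqlite_master "
        "WHERE type = 'table' AND sql IS NOT NULL ORDER BY name"
    ).fetchall()
    schema = "\n".join(row[0] for row in rows)
    # "Key data": distinct values the model needs to write valid filters.
    regions = [r[0] for r in conn.execute(
        "SELECT DISTINCT region FROM sales ORDER BY region")]
    return (
        "You are a sales analysis agent.\n"
        "Answer questions by writing SQLite-compatible SELECT queries.\n"
        "Database schema:\n" + schema + "\n"
        "Known regions: " + ", ".join(regions)
    )

# Hypothetical in-memory database standing in for the workshop's sales data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (id INTEGER PRIMARY KEY, region TEXT, revenue REAL)")
conn.executemany("INSERT INTO sales (region, revenue) VALUES (?, ?)",
                 [("EMEA", 1200.0), ("APAC", 950.0)])
instructions = build_instructions(conn)
print(instructions)
```

Giving the model the real table and column names up front is what makes the generated SQL likely to run without a round of trial and error.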

From a security perspective, we must address the risk of SQL injection. Because the LLM generates queries dynamically, a malicious prompt could attempt to create a query that modifies or destroys data. Therefore, a critical best practice is to grant the database connection read-only permissions. This mitigates the risk by ensuring that even a successful injection attack cannot alter or delete data.

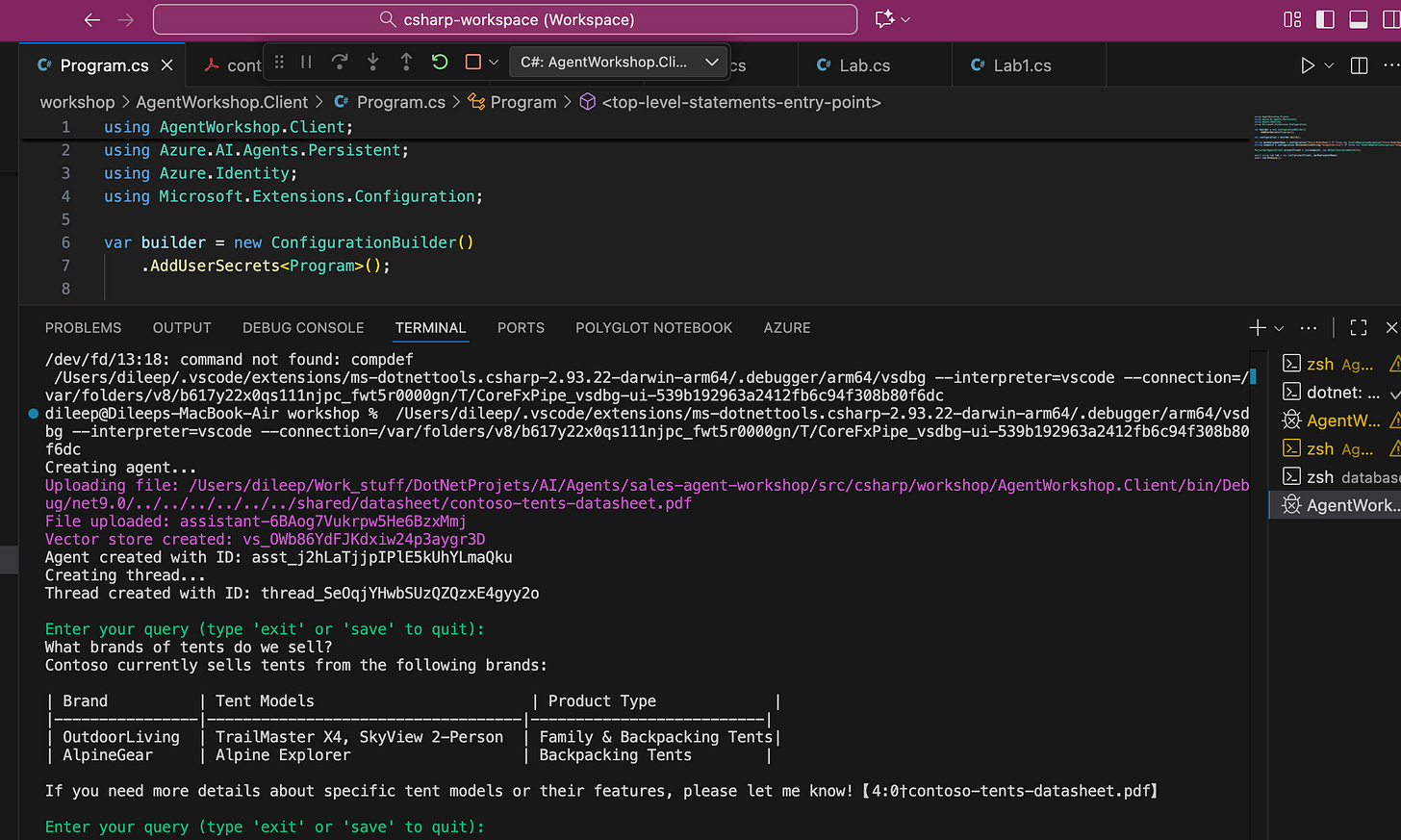

Lab 2 demonstrates how to query data using an LLM and the file search tool. The file used for the search is uploaded at runtime.

Grounding a conversation with documents is highly effective, especially for retrieving product details that may not be available in an operational database. The Foundry Agent Service includes a File Search tool, which enables agents to retrieve information directly from uploaded files, such as user-supplied documents or product data, enabling a RAG-style search experience.

When the app starts, a vector store is created, the sales datasheet PDF file is uploaded to the vector store, and it is made available to the agent.
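To give a feel for what a vector store does, here is a toy version in Python. It is only an illustration of the retrieval idea: it uses word counts and cosine similarity as a stand-in for the learned embeddings a real service would use, and `ToyVectorStore`, its methods, and the sample chunks are hypothetical rather than part of the Foundry Agent Service.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    # Stand-in for an embedding model: a bag of lowercase word counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

class ToyVectorStore:
    def __init__(self) -> None:
        self.items = []  # list of (chunk_text, vector) pairs

    def upload(self, chunk: str) -> None:
        self.items.append((chunk, embed(chunk)))

    def search(self, query: str, top_k: int = 1) -> list:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]),
                        reverse=True)
        return [chunk for chunk, _ in ranked[:top_k]]

# Hypothetical chunks, as if extracted from an uploaded product datasheet.
store = ToyVectorStore()
store.upload("The alpine tent sleeps four people and weighs 3 kg.")
store.upload("The trail backpack has a 40 litre capacity.")
store.upload("Returns are accepted within 30 days of purchase.")

best = store.search("What is the capacity of the backpack?")
print(best)
```

The retrieved chunk is what gets placed in front of the model as grounding, which is the "retrieval" half of a RAG-style answer.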

Other tools, such as the code interpreter and multilingual visualizations, are covered in Lab 3 and Lab 4, which can be referred to at -

The sales agent is a hackathon project from the Microsoft agent hackathon tutorials.

I hope this post helped you understand how .NET developers can use agents in this space. If you found this article helpful, please consider following me for more content.